A traditional SQL DBMS stores data in the records and fields (rows and columns) of a collection of flat database tables. All tables have the same rectangular structure in SQL. A SQL database is relational because of the relationships set up between the different tables.

In a relational DBMS (RDBMS), information about a single record can be combined from multiple tables by relating values in matching columns. This helps to eliminate redundant data; beyond that, however, an RDBMS does not support any additional structuring of the data itself.
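As a minimal sketch of combining information by relating values in matching columns, the following uses Python's built-in `sqlite3` module. The `products` and `manufacturers` tables and their contents are hypothetical and serve only to illustrate the idea; they are not part of any MDM schema.

```python
import sqlite3

# Hypothetical two-table schema: each product refers to a manufacturer by id.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE manufacturers (id INTEGER PRIMARY KEY, name TEXT);
    CREATE TABLE products (
        id INTEGER PRIMARY KEY,
        name TEXT,
        manufacturer_id INTEGER REFERENCES manufacturers(id)
    );
    INSERT INTO manufacturers VALUES (1, 'Acme'), (2, 'Globex');
    INSERT INTO products VALUES (10, 'Widget', 1), (11, 'Gadget', 1), (12, 'Gizmo', 2);
""")

# The manufacturer name is stored once and combined with each product
# by matching products.manufacturer_id against manufacturers.id.
rows = conn.execute("""
    SELECT p.name, m.name
    FROM products AS p
    JOIN manufacturers AS m ON m.id = p.manufacturer_id
    ORDER BY p.id
""").fetchall()
print(rows)
```

The name 'Acme' appears in one row of `manufacturers` even though two products refer to it, which is the redundancy elimination described above.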

By contrast, the MDM system supports a variety of different table types that are specifically suited for the particular requirements of storing, organizing, structuring, classifying, managing, and publishing information in an MDM repository (including efficient support for category-specific attributes, which are inherently non-relational), as shown in the following table.

Table Type

Description

Main table and subtables

Flat

Main table or subtable. A flat table has the standard, rectangular SQL structure consisting of records and fields (rows and columns). The main table of an MDM repository is always a flat table.

Hierarchy

Subtable. A hierarchy table organizes information in a hierarchy, where each record is related to a parent record (even if the only parent is the root) and may also be related to sibling records and/or child records. The main table in an MDM repository typically contains some fields whose data may be hierarchical in nature. For example, a Manufacturer field may need to accommodate division and subdivision information for manufacturers. This hierarchical information is stored in a separate, hierarchy subtable associated with the Manufacturer lookup field in the main table. Most of the hierarchy tables used in an MDM repository contain lookup information for fields in the main table. Other hierarchy tables in MDM include taxonomy tables, the Masks table, and the Families table, described below. MDM supports hierarchies with an unlimited number of parent/child levels.

Note that a hierarchy table is useful even when it is flat (i.e., it has only leaf nodes below the root), because it stores the ordered sequence of sibling records, allowing you to override the unordered sequence of values in a flat table and instead put the values in a fixed order.
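The hierarchy concept can be sketched as a small in-memory structure in which each record knows its parent and keeps its children in a fixed order. This is an illustrative model only; the class `HierarchyRecord` and the sample names are hypothetical and do not reflect how MDM stores hierarchy tables internally.

```python
class HierarchyRecord:
    """One record in a hypothetical hierarchy table."""

    def __init__(self, name, parent=None):
        self.name = name
        self.parent = parent
        self.children = []  # list position preserves the sibling order
        if parent is not None:
            parent.children.append(self)

    def path(self):
        """Return the names from the root down to this record."""
        node, parts = self, []
        while node is not None:
            parts.append(node.name)
            node = node.parent
        return list(reversed(parts))


# A multi-level hierarchy: manufacturer, division, subdivision.
root = HierarchyRecord("Manufacturers")
acme = HierarchyRecord("Acme", root)
tools = HierarchyRecord("Tools Division", acme)
hand_tools = HierarchyRecord("Hand Tools", tools)
print(hand_tools.path())

# A "flat" hierarchy (leaf nodes only) still records a fixed sibling order.
sizes = HierarchyRecord("Sizes")
for label in ["Small", "Medium", "Large"]:
    HierarchyRecord(label, sizes)
print([child.name for child in sizes.children])
```

In the second example the values stay in the order Small, Medium, Large rather than falling back to an arbitrary or alphabetical order.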

Taxonomy

Subtable. A taxonomy is the classification scheme that defines the categories and subcategories that apply to a collection of records. Categorizing records enables you to isolate subsets of records for various organizing, searching, editing and publishing purposes.

A taxonomy table in MDM stores a hierarchy of categories and subcategories and also supports attributes, “subfields” that apply to particular categories rather than to the entire collection of records. MDM supports multiple simultaneous taxonomies.
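To illustrate why category-specific attributes do not fit a single rectangular table, the sketch below models a hypothetical taxonomy in which each category lists the attributes that apply to it. The category names, attribute names, and the `inapplicable_attributes` helper are assumptions made for this example, not MDM identifiers.

```python
# Hypothetical taxonomy: each category lists the attributes that apply to it.
taxonomy = {
    "Fasteners": [],
    "Fasteners/Screws": ["Thread Pitch", "Head Type"],
    "Fasteners/Washers": ["Inner Diameter", "Outer Diameter"],
}


def inapplicable_attributes(record):
    """Return attribute names on the record that do not apply to its category."""
    allowed = set(taxonomy[record["category"]])
    return sorted(set(record["attributes"]) - allowed)


screw = {
    "name": "M4 screw",
    "category": "Fasteners/Screws",
    "attributes": {"Thread Pitch": "0.7 mm", "Head Type": "Pan"},
}
washer = {
    "name": "M4 washer",
    "category": "Fasteners/Washers",
    "attributes": {"Inner Diameter": "4.3 mm", "Head Type": "Pan"},
}

print(inapplicable_attributes(screw))   # nothing out of place
print(inapplicable_attributes(washer))  # "Head Type" does not apply to washers
```

A flat table would need a column for every attribute of every category, most of them empty for any given record; tying attributes to categories avoids that.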

Qualified

Subtable. A qualified table in MDM stores a set of lookup records, and also supports qualifiers, “subfields” that apply not to the qualified table record by itself, but rather to each association of a qualified table record with a main table record. MDM supports multiple simultaneous qualified tables.

Qualified tables can be used to support product applications and application-based search, and also to store any large set of subtable records that contain fields whose values are different for each main table record, such as multiple prices for different quantities, divisions, regions, or trading partners; cross-reference part numbers; and additional distributor/supplier/customer-specific information for different distributors, suppliers, or customers.
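The distinction between a qualified table record and its qualifiers can be sketched as follows: the lookup records are defined once, while qualifier values are stored per association between a main table record and a qualified table record. The names `price_tiers`, `links`, and `prices_for`, and the sample data, are hypothetical.

```python
# Qualified table records (hypothetical): quantity tiers, defined once.
price_tiers = {
    1: {"min_quantity": 1},
    2: {"min_quantity": 100},
}

# Qualifier values belong to each (main record, qualified record) association,
# not to the qualified record by itself.
links = {
    ("P-100", 1): {"price": 9.99},
    ("P-100", 2): {"price": 7.49},
    ("P-200", 1): {"price": 24.00},
}


def prices_for(product_id):
    """Return (min_quantity, price) pairs for one main table record."""
    result = []
    for (pid, tier_id), qualifiers in links.items():
        if pid == product_id:
            result.append((price_tiers[tier_id]["min_quantity"], qualifiers["price"]))
    return sorted(result)


print(prices_for("P-100"))
print(prices_for("P-200"))
```

The same tier record (for example, the 100-unit tier) carries a different price for each product it is linked to, which is the role the text assigns to qualifiers.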

Object tables

Images

A single table named Images. Stores image files, where each image is stored as a record in the table.

Text Blocks

A single table named Text Blocks. Stores blocks of text, where each text block is stored as a record in the table.

Copy Blocks

A single table named Copy Blocks. Stores blocks of text interpreted as copy, where each text block is stored as a record in the table.

Text HTMLs

A single table named Text HTMLs. Stores blocks of text interpreted as HTML, where each text block is stored as a record in the table.

PDFs

A single table named PDFs. Stores PDF files, where each PDF is stored as a record in the table.

Sounds

A single table named Sounds. Stores sound files, where each sound file is stored as a record in the table.

Videos

A single table named Videos. Stores video files, where each video file is stored as a record in the table.

Binary Objects

A single table named Binary Objects. Stores other binary object files, where each binary object file is stored as a record in the table.

Special tables

Masks

A single hierarchy table named Masks. In concept, a mask acts like a stencil, in that it blocks (“masks”) all main table records from view except the defined subset of records that are included in the mask, to allow the subset to be viewed and manipulated as a whole. A mask is a static snapshot of the set of records that are included in the mask (as opposed to a view or a named search, where the result set is determined dynamically every time the search is run). Each record in the Masks table is the name of a subset of main table records. MDM supports an unlimited hierarchy of masks.

Named Searches

A single flat table named Named Searches. A named search is a static snapshot of the search selections that were in effect when the named search was saved (as opposed to a mask, which is a snapshot of the subset of records), where the result set itself is determined dynamically when it is selected. Each record in the Named Searches table returns a subset of main table records. MDM supports 400 named searches per repository.
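The difference between a mask (a snapshot of records) and a named search (a snapshot of search selections) can be sketched with plain Python data. The `run_search` helper and the sample records are hypothetical and only model the behavior described above.

```python
records = [
    {"id": 1, "color": "red"},
    {"id": 2, "color": "blue"},
    {"id": 3, "color": "red"},
]


def run_search(criteria, data):
    """Return ids of records whose fields match every search selection."""
    return [r["id"] for r in data if all(r.get(k) == v for k, v in criteria.items())]


criteria = {"color": "red"}

# A mask stores the matching records themselves (here, their ids).
mask = set(run_search(criteria, records))

# A named search stores only the selections; results are computed on demand.
named_search = dict(criteria)

# A new matching record is added after both were saved.
records.append({"id": 4, "color": "red"})

mask_result = [r["id"] for r in records if r["id"] in mask]
search_result = run_search(named_search, records)
print(mask_result)    # the new record is not part of the mask
print(search_result)  # the new record is picked up by the named search
```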

Families

A single hierarchy table named Families. Used to further partition main table records in each category into smaller groups based upon the values of other fields and/or attributes. You can associate family data (a paragraph, an image, bullets) once with a family of products rather than with each individual product, and also define the table layout of the field and/or attribute data (field order; stack, vertical, and horizontal pivots; and other display options). This table is available only in Family mode.

Image Variants

(Does not appear anywhere in the MDM Client)

A single table named Image Variants. Used to define the structure and format of each of the variants for each image. Each variant is a modified version derived from an original image; the original image is never modified. This table is managed in the MDM Console and is not visible in the MDM Client.

Relationships

(Does not appear anywhere in the MDM Client)

A single table named Relationships. Used to define each of the different record-level relationships. Each relationship can be either bidirectional (sibling) or unidirectional (parent-child). This table is managed in the MDM Console and is not visible in the MDM Client, although the relationships between records can themselves be created and edited in Record mode.
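As a rough sketch of the two relationship kinds, the example below treats a sibling relationship as navigable from either record and a parent-child relationship as directed from parent to child. The navigation semantics, the relationship names, and the `related` helper are assumptions made for illustration; the source only states that the two kinds exist.

```python
# Hypothetical relationship definitions and record-level links.
RELATIONSHIP_KINDS = {"Accessories": "sibling", "Kits": "parent-child"}

links = [
    ("Kits", "K-1", "P-1"),         # kit K-1 is the parent of P-1
    ("Kits", "K-1", "P-2"),         # kit K-1 is the parent of P-2
    ("Accessories", "P-1", "P-3"),  # P-1 and P-3 are siblings
]


def related(relationship, record_id):
    """Return the records reachable from record_id through one relationship."""
    kind = RELATIONSHIP_KINDS[relationship]
    found = []
    for name, source, target in links:
        if name != relationship:
            continue
        if source == record_id:
            found.append(target)
        elif kind == "sibling" and target == record_id:
            # Assumption: sibling links can be followed from either end.
            found.append(source)
    return found


print(related("Kits", "K-1"))
print(related("Kits", "P-1"))
print(related("Accessories", "P-3"))
```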

Workflows

A single table named Workflows. Stores the workflows of an MDM repository, where each workflow is stored as a record in the table. Workflows are created and edited in the MDM Client.

Data Groups

A single hierarchy table named Data Groups. Stores the hierarchy of data groups used to break the entire set of objects in the MDM repository into manageable subgroups.

Validation Groups

A single hierarchy table named Validation Groups. Stores the hierarchy of validation groups used to organize multiple validations for subsequent execution as a group.

System tables

Roles

(Does not appear anywhere in the MDM Client)

A single table named Roles. One of three tables used to implement MDM repository security and access control. Each role can selectively grant or deny access to any MDM function and to any table or field. This table is managed in the MDM Console.

Users

(Does not appear anywhere in the MDM Client)

A single table named Users. One of three tables used to implement MDM repository security and access control. Each user can have one or more roles. This table is managed in the MDM Console.

Logins

(Does not appear anywhere in the MDM Client)

A single table named Logins. One of three tables used to implement MDM repository security and access control. Contains an entry for each currently connected MDM client application; the MDM Console user can terminate any of these connections.

Change Tracking

(Does not appear anywhere in the MDM Client)

A single table named Change Tracking. Allows you to specify the fields for which adds, modifies, and deletes should be tracked and stored in the Change Tracking table.

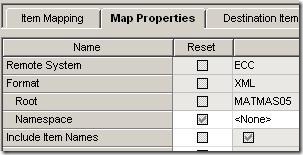

Remote Systems

(Does not appear anywhere in the MDM Client)

A single table named Remote Systems. Used to define the different remote systems for import and export. Each remote system specifies whether it supports import only, export only, or both.

Ports

(Does not appear anywhere in the MDM Client)

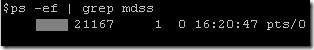

A single table named Ports. Used to encapsulate the logistical and configuration information for inbound and outbound processing of MDM data, for consolidation and distribution respectively.

URLs

(Does not appear anywhere in the MDM Client)

A single table named URLs. Used to specify the URLs that can be used as the target of an embedded browser in the Web tab in the MDM Client.

XML Schemas

(Does not appear anywhere in the MDM Client)

A single table named XML Schemas. Used to identify the XML schemas for import and syndication. Each XML schema is the name of an .xsd file.

Reports

(Does not appear anywhere in the MDM Client)

A single table named Reports. Contains an entry for each report file generated by the various MDM repository operations, which can be accessed and viewed by the MDM Console user.

Logs

(Does not appear anywhere in the MDM Client)

A single table named Logs. Contains an entry for each log file generated by the MDM Server, which can be accessed and viewed by the MDM Console user.